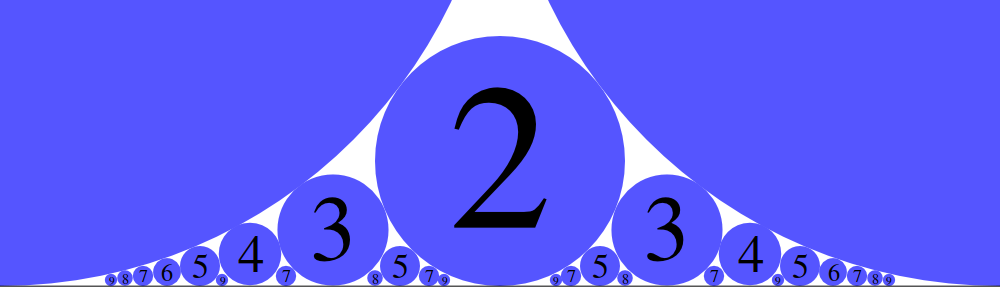

In my paper with Joshua Xiong, Nim Fractals, we produced a bijection between P-positions in three-pile Nim and a three-branch Ulam-Warburton automaton. We also defined a parent-child relationship on games that is induced by this bijection. Namely, two consecutive P-positions in a longest optimal game of Nim are the ones that correspond to a parent-child pair in the automaton. A cell in the Ulam-Warburton automaton has exactly one parent. That means that if (a,b,c) is a Nim P-position other than (0,0,0), then exactly one of (a − 1,b − 1,c), (a − 1,b,c − 1), and (a,b − 1,c − 1) must be a P-position and a parent of (a,b,c). (See our paper for more details.)

Now I want to explicitly write out the rules of an automaton which will generate the Nim P-positions in 3D.

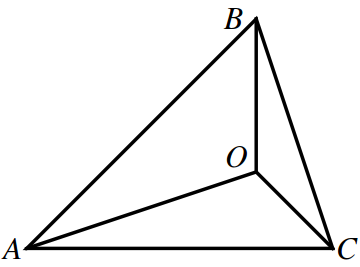

Let me restrict the evolution of the automaton to the non-negative octant. That is, we consider points (a,b,c) in 3D, where each coordinate is a non-negative integer. We define the neighbors of the point (a,b,c) to be the points that differ from (a,b,c) in exactly two coordinates, by 1 in each of them. So each point strictly inside the octant has 12 neighbors. (There are three ways to choose two coordinates, and after that four ways to choose plus or minus 1 in each of them.)

There is a geometric interpretation of this notion of neighborhood. Let us associate a unit cube with each point with integer coordinates. The center of the cube is located at the given point and the sides are parallel to the axes. Then two points are neighbors if and only if the corresponding cubes share exactly one edge. Now it is easier to see that a cube has 12 neighbors, as it has 12 edges.
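As a quick sanity check of this count, here is a minimal Python sketch that lists the neighbors of a point inside the non-negative octant. The function name `neighbors` is hypothetical, introduced only for this illustration.

```python
from itertools import combinations, product


def neighbors(point):
    """Return the neighbors of `point` that lie in the non-negative octant.

    Two points are neighbors when they differ in exactly two coordinates,
    by 1 in each of them.
    """
    result = []
    for i, j in combinations(range(3), 2):          # which two coordinates change
        for di, dj in product((-1, 1), repeat=2):   # plus or minus 1 in each
            q = list(point)
            q[i] += di
            q[j] += dj
            if min(q) >= 0:                         # stay inside the octant
                result.append(tuple(q))
    return result


print(len(neighbors((5, 5, 5))))   # a point strictly inside the octant
print(neighbors((0, 0, 0)))        # the corner of the octant
```

A point strictly inside the octant, such as (5,5,5), gets all 12 neighbors, while the corner (0,0,0) keeps only the three neighbors with non-negative coordinates.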

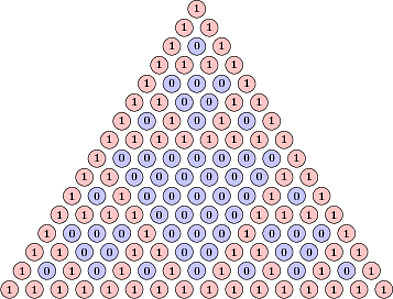

Here is the rule of the automaton. Points never die. We start with one alive point, the patriarch (0,0,0). A non-alive point is born inside the non-negative octant if it has exactly one alive neighbor that is closer to the patriarch. In other words, the point (a,b,c) is born if and only if exactly one of the three points (a − 1,b − 1,c), (a − 1,b,c − 1), and (a,b − 1,c − 1) is alive. It follows that the points that are born at the n-th step have coordinate sum 2n.

Consider, for example, the starting growth. At the first step the points (0,1,1), (1,0,1), and (1,1,0) are born. At the next step the points (0,2,2), (2,0,2), and (2,2,0) are born, while the point (1,1,2) will never be born: starting from the second step it has at least two live neighbors that are closer to the patriarch, namely (0,1,1) and (1,0,1).
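The starting growth can be reproduced with a short simulation. The following is a minimal Python sketch of the rule as stated above, not an optimized implementation; the names `UPS`, `step`, and `grow` are hypothetical and chosen only for this example.

```python
# Offsets from a point to the three neighbors that are farther from the patriarch.
UPS = ((1, 1, 0), (1, 0, 1), (0, 1, 1))


def step(alive):
    """Return the set of points born in one step, given the set of alive points."""
    # Only points just above an alive point can have an alive lower neighbor.
    candidates = {
        (a + da, b + db, c + dc)
        for (a, b, c) in alive
        for (da, db, dc) in UPS
    } - alive
    born = set()
    for (a, b, c) in candidates:
        lower = [(a - da, b - db, c - dc) for (da, db, dc) in UPS]
        # Points with a negative coordinate are never alive, so they count as 0.
        if sum(p in alive for p in lower) == 1:
            born.add((a, b, c))
    return born


def grow(steps):
    """Run the automaton from the patriarch; return the list of born sets."""
    alive = {(0, 0, 0)}
    generations = []
    for _ in range(steps):
        born = step(alive)
        generations.append(born)
        alive = alive | born
    return generations


for n, born in enumerate(grow(3), start=1):
    print(n, sorted(born))
```

The first two printed generations are the ones listed above, and (1,1,2) does not appear in any generation.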

Theorem. In the resulting automaton, the points that are born at step n are exactly the P-positions of Nim with a total of 2n tokens.

Proof. Only the points with an even total can be born. Now we proceed by induction on the total number of tokens. The base case is obvious. Suppose we have proved that at step n exactly the P-positions with 2n tokens are born. Consider a P-position of Nim, (a,b,c), such that a + b + c = 2n + 2. Remember that the bitwise XOR of a, b, and c is zero. Consider the 2-adic values of a, b, and c (aka the largest powers of 2 dividing a, b, and c). Exactly two of these three integers must share the smallest 2-adic value. Suppose these are a and b. Then (a − 1,b − 1,c) is a P-position, while (a − 1,b,c − 1) and (a,b − 1,c − 1) are not. That means, by the inductive hypothesis, that (a,b,c) has exactly one alive neighbor closer to the patriarch. So the position (a,b,c) is born at time n + 1.

Now we need to prove that nothing else is born. For the sake of contradiction, suppose that (a,b,c) is the earliest N-position to be born. That means it has a live neighbor that is a P-position closer to the patriarch. WLOG we can assume that this neighbor is (a − 1,b − 1,c).

If a − 1 and b − 1 are both even, then (a,b,c) is a P-position, which is a contradiction. Suppose a − 1 and b − 1 are both odd. Then their binary representations can’t have the same number of ones at the end; otherwise, (a,b,c) is a P-position. That is, a and b have different 2-adic values. Suppose a has the smaller 2-adic value. Then, for (a − 1,b − 1,c) to be a P-position, a and c have to have the same 2-adic value. That means (a,b − 1,c − 1) is a P-position too. Now suppose a − 1 and b − 1 are of different parities. Without loss of generality, suppose a − 1 is odd and b − 1 is even; then c is odd. Then (a − 1,b,c − 1) is a P-position too. Thus we can always find a second neighbor with the same number of tokens. That is, both neighbors are alive at the same time, and the N-position (a,b,c) is never born. □
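The two facts used in the proof can be checked by brute force on small positions. The sketch below is only an illustration under my own naming (`is_p`, `val2`, `lower_neighbors`, and `check_proof_claims` are hypothetical helpers); it treats the 2-adic value of 0 as infinite. It checks that every nonzero P-position has exactly one P-position among its three lower neighbors, obtained by decreasing the two piles with the smallest 2-adic value, and that no N-position has exactly one such neighbor.

```python
from itertools import product


def is_p(a, b, c):
    """A valid Nim position is a P-position when the XOR of the piles is zero."""
    return min(a, b, c) >= 0 and a ^ b ^ c == 0


def val2(x):
    """Exponent of the largest power of 2 dividing x (infinite for x = 0)."""
    return float("inf") if x == 0 else (x & -x).bit_length() - 1


def lower_neighbors(a, b, c):
    """The three neighbors that are closer to the patriarch."""
    return [(a - 1, b - 1, c), (a - 1, b, c - 1), (a, b - 1, c - 1)]


def check_proof_claims(limit):
    """Check both claims for all positions with piles smaller than `limit`."""
    for pos in product(range(limit), repeat=3):
        if pos == (0, 0, 0):
            continue
        p_parents = [q for q in lower_neighbors(*pos) if is_p(*q)]
        if is_p(*pos):
            values = [val2(x) for x in pos]
            keep = values.index(max(values))  # the pile that is not decreased
            expected = tuple(x - (i != keep) for i, x in enumerate(pos))
            if p_parents != [expected]:
                return False
        elif len(p_parents) == 1:
            return False
    return True


print(check_proof_claims(32))
```

This is a finite check, so it supports the argument for small piles only; the proof above is what covers all positions.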

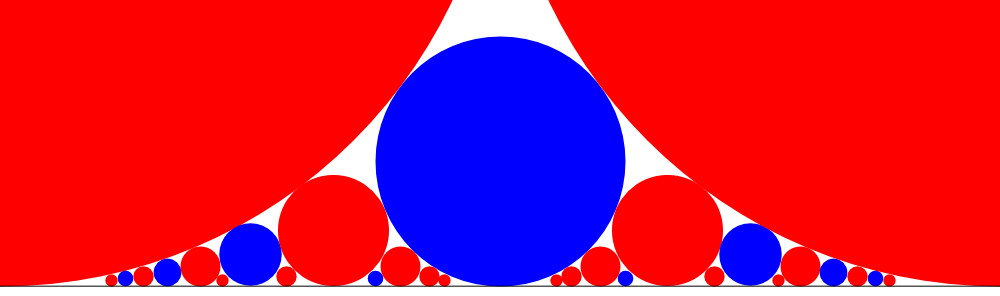

One might wonder what happens if we relax the automaton rule by removing the constraint on the distance to the patriarch. Suppose a new point is born if it has exactly one alive neighbor. This will be a different automaton. Let us look at the starting growth, up to a permutation of coordinates. At step one, position (0,1,1) is born. At the next step, position (0,2,2) is born. At the step after that, positions (0,1,3), (1,2,3), and (0,3,3) are born. We see that (0,1,3) is not a P-position. What will happen later? Will this N-position mess up the future positions that are born? Actually, this automaton will still contain all the P-positions of Nim.

Theorem. In the new automaton, the points that are born at step n and have a total of 2n tokens are exactly the P-positions of Nim with a total of 2n tokens.

Proof. Only the points with an even total can be born. Now we proceed by induction on the total number of tokens. The base case is obvious. The birth of the points that have a total of 2n tokens and are born at step n depends only on the points with a total of 2n − 2 tokens that are born at step n − 1. By the inductive hypothesis, those are the P-positions with 2n − 2 tokens. So the points that have a total of 2n tokens and are born at step n are exactly the points born at step n in the first automaton described above. To reiterate, N-positions with 2n tokens are born after P-positions with 2n tokens, so they do not influence the birth of P-positions with 2n + 2 tokens. □
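The relaxed automaton is also easy to simulate. Here is a minimal Python sketch, with hypothetical names (`OFFSETS`, `relaxed_step`, `run_relaxed`, `p_positions`) chosen for this example, that prints the starting growth up to a permutation of coordinates and compares the points with 2n tokens born at step n against the Nim P-positions with 2n tokens.

```python
from itertools import combinations, product

# The 12 offsets to the neighbors: two coordinates change, each by plus or minus 1.
OFFSETS = []
for i, j in combinations(range(3), 2):
    for di, dj in product((-1, 1), repeat=2):
        d = [0, 0, 0]
        d[i], d[j] = di, dj
        OFFSETS.append(tuple(d))


def relaxed_step(alive):
    """Points born in one step: non-alive points with exactly one alive neighbor."""
    counts = {}
    for (a, b, c) in alive:
        for (da, db, dc) in OFFSETS:
            q = (a + da, b + db, c + dc)
            if min(q) >= 0 and q not in alive:
                counts[q] = counts.get(q, 0) + 1
    return {q for q, k in counts.items() if k == 1}


def run_relaxed(steps):
    """Run the relaxed automaton from (0,0,0); return the list of born sets."""
    alive = {(0, 0, 0)}
    history = []
    for _ in range(steps):
        born = relaxed_step(alive)
        history.append(born)
        alive = alive | born
    return history


def p_positions(total):
    """All Nim P-positions (a, b, c) with a + b + c equal to `total`."""
    return {
        (a, b, a ^ b)
        for a in range(total + 1)
        for b in range(total + 1)
        if a + b + (a ^ b) == total
    }


for n, born in enumerate(run_relaxed(6), start=1):
    shapes = sorted({tuple(sorted(p)) for p in born})
    top_layer = {p for p in born if sum(p) == 2 * n}
    print(n, shapes, top_layer == p_positions(2 * n))
```

Each line shows the step, the positions born at that step with coordinates sorted, and whether the points with 2n tokens born at that step coincide with the P-positions with 2n tokens.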
