Background and Definitions

I’ve heard about many mathematicians running polymath projects through their blogs. I wasn’t planning to do that. It just happened. In this essay, I describe the collaborative effort that was made to solve the following problem that appeared in my blog in July 2009:

Baron Münchhausen has n identical-looking coins weighing 1, 2, …, n grams. The Baron’s guests know that he has this set of coins, but do not know which one is which. The Baron knows which coin is which and wants to demonstrate to his guests that he is right. He plans to conduct weighings on a balance scale, so that the guests will be convinced about the weight of every coin. What is the smallest number of weighings that the Baron must do in order to reveal the weights?

The sequence a(n) of the minimal number of weighings is called Baron Münchhausen’s omni-sequence, to distinguish it from the Existential Baron’s sequence, in which he needs the smallest number of weighings to prove the weight of one coin of his choosing.

In this essay I will describe efforts to calculate a(n). The contributors are: Max Alekseyev, Ilya Bogdanov, Maxim Kalenkov, Konstantin Knop, Joel Lewis and Alexey Radul.

Starting Examples: n = 1, n = 2 and n = 3

The sequence starts as a(1) = 0, because there is nothing to demonstrate. Next, a(2) = 1, since with only one weighing you can find which coin is lighter.

Next, a(3) = 2. Indeed you can’t prove all the coins in one weighing, but in the first weighing you can show that the 1-gram coin is lighter than the 2-gram coin. In the second weighing you can show that the 2-gram coin is lighter than the 3-gram coin. Thus, in two weighings you can establish an order of weights and prove the weight of all three coins.

n = 4 and the Tightness Conjecture

As you can see in the case of n = 3, you can compare coins in order and prove the weight of all the coins in n − 1 weighings. But this is not at all the optimal number. Let us see why a(4) = 2. In his first weighing the Baron can put the 1- and the 2-gram coins on the left pan of the balance and the 4-gram coin on the right pan. In the future, I will just describe that weighing as 1 + 2 < 4. This way everyone agrees that the coin on the right pan is 4 grams, and the coin that is left out is 3 grams. The only thing that is left to do is to compare the 1-gram and the 2-gram coins in the second weighing.

Later Konstantin Knop sent me a different solution for n = 4. His solution provides an interesting example. While looking for solutions, people usually try to make an unbalanced weighing “tight”. That is, they make it so that the heavier cup is exactly 1 gram heavier than the lighter cup. If you are trying to prove one coin in one weighing, “tightness” is a requirement. But it is not necessary when you have several weighings. Here is the first weighing in Konstantin’s solution: 1 + 3 = 4; and his second weighing is: 1 + 2 < 3 + 4. We see that the second weighing has a weight difference of four between pans.

n = 5 and n = 6

Next, a(5) = 2. We can have the first weighing the same as before: 1 + 2 < 4, and the second weighing: 1 + 4 = 5. The second weighing confirms that the heavy coin on the right pan in the first weighing can’t be the heaviest one, thus it has to be the 4-gram coin. After that you can see that every coin is identified.

Next, a(6) = 2. The first weighing, 1 + 2 + 3 = 6, divides all coins into three groups: {1,2,3}, {4,5} and {6}. We know to which group each coin belongs, but we do not know which coin in the group is which. The second weighing: 1 + 6 < 3 + 5, identifies every coin. Indeed, the only possibility for the left side to weigh less than the right side is when the smallest weighing coin from the first group and 6 are on the left, and the two largest weighing coins from the first two groups are on the right.
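For small n, claims like these can be checked by brute force. The sketch below is only an illustration (the function names are made up for this example, and it is not the search program mentioned later in the essay): it tries every way a sceptical guest could assign the weights 1, …, n to the coins and keeps the assignments that agree with all announced outcomes. Coins are named by their true weights, and a weighing is written as a triple (left pan, right pan, outcome).

```python
from itertools import permutations

def consistent_assignments(n, weighings):
    """Yield every assignment of the weights 1..n to the coins that agrees
    with all announced outcomes.

    A weighing is (left, right, outcome) with outcome '<' or '='.
    assignment[c - 1] is the weight a guest imagines for the coin called c.
    """
    for assignment in permutations(range(1, n + 1)):
        for left, right, outcome in weighings:
            l = sum(assignment[c - 1] for c in left)
            r = sum(assignment[c - 1] for c in right)
            satisfied = (l < r) if outcome == '<' else (l == r)
            if not satisfied:
                break
        else:
            # No weighing contradicted this assignment.
            yield assignment

def candidate_weights(n, weighings):
    """For each coin, the set of weights it could still have."""
    options = [set() for _ in range(n)]
    for assignment in consistent_assignments(n, weighings):
        for coin, weight in enumerate(assignment):
            options[coin].add(weight)
    return options

def identifies_all(n, weighings):
    """True if every coin is left with exactly one possible weight."""
    return all(len(s) == 1 for s in candidate_weights(n, weighings))

first = ([1, 2, 3], [6], '=')
second = ([1, 6], [3, 5], '<')
groups_after_first = candidate_weights(6, [first])
solved = identifies_all(6, [first, second])
```

For n = 6, `groups_after_first` reproduces the three groups {1,2,3}, {4,5} and {6}, and `solved` is true. The loop runs over n! assignments, so this approach is only practical for small n.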

The Lower Bound and n = 10, n = 11

When I was writing my essay I suspected that n = 6 is the largest number for which a solution can be established in two weighings, but I didn’t have any proof. So I was embarrassed to show my solutions in three weighings for n = 7, 8 and 9.

On the other hand, I published the solutions suggested by my son, Alexey Radul, for n = 10 and n = 11. In these cases the theoretical lower bound of ⌈log3(n)⌉ for a(n) is equal to 3, so finding solutions in three weighings was enough to establish the value of the sequence a(n) for n = 10 and n = 11.
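One way to see where the logarithmic bound comes from: in each weighing a coin is on the left pan, on the right pan, or left out, so k weighings give at most 3^k different histories, and two coins with the same history could be swapped without changing any outcome. The short sketch below (the function name is illustrative) computes the smallest k with 3^k ≥ n using integer arithmetic only.

```python
def lower_bound(n):
    """Smallest k such that 3**k >= n."""
    k, reach = 0, 1
    while reach < n:
        reach *= 3
        k += 1
    return k

bounds = {n: lower_bound(n) for n in (6, 9, 10, 11, 27, 28)}
```

The bound is 2 up to n = 9, 3 from n = 10 to n = 27, and 4 from n = 28 on. It is only a lower bound: for n = 3 it gives 1, while a(3) = 2.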

So, a(10) = 3, and here are the weighings. The first weighing is 1 + 2 + 3 + 4 = 10. After this weighing, we can divide the coins into three groups {1,2,3,4}, {5,6,7,8,9} and {10}. The second weighing is 1 + 5 + 10 < 8 + 9. After the second weighing we can divide all coins into groups we know they belong to: {1}, {2,3,4}, {5}, {6,7}, {8,9} and {10}. The last weighing contains the lowest weighing coin from each non-single-coin group on the left and the largest weighing coin on the right, plus, in order to balance them, the coins whose weights we know. The last weighing is 2 + 6 + 8 + 5 = 4 + 7 + 9 + 1.

Similarly, a(11) = 3, and the weighings are: 1 + 2 + 3 + 4 < 11; 1 + 2 + 5 + 11 = 9 + 10; 6 + 9 + 1 + 3 = 8 + 4 + 2 + 5.

An Exhaustive Search and a Mystery Solution for n = 6

After publishing my blog I wrote a letter to the Sequence Fans mailing list asking them to expand the sequence. Max Alekseyev replied with the results of an exhaustive search program he wrote. First of all, he found a counter-intuitive solution for n = 6. Namely, the following two weighings: 1 + 3 < 5 and 1 + 2 + 5 < 3 + 6. He also confirmed that it is not possible to identify the coins in two weighings for n = 7, n = 8 and n = 9.

Many Interesting Examples for n = 7

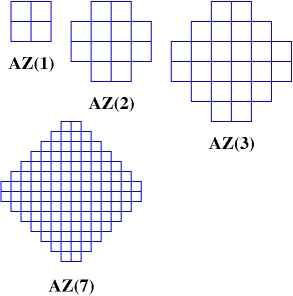

So now I can stop being embarrassed and proudly present my solution for n = 7 in three weighings. That is, a(7) = 3 and the first weighing is: 1 + 2 + 3 < 7, and it divides all the coins into three groups {1,2,3}, {4,5,6} and {7}. The second weighing, 1 + 4 < 6, divides them even further. Now we know the identity of every coin except the group {2,3}, which we can disambiguate with the third weighing: 2 < 3.

In many solutions that I’ve seen, one of the weighings was very special: every coin on one cup was lighter than every coin on the other cup. I wondered if that was always the case. Konstantin Knop sent me a counterexample for n = 7. The first weighing is: 1 + 2 + 3 + 5 = 4 + 7. The second is: 1 + 2 + 4 < 3 + 5. The third is: 1 + 3 + 4 = 2 + 6.

Later Max Alekseyev sent me two more special solutions for n=7. The first one contains only equalities: 2 + 5 = 7; 1 + 2 + 4 = 7; 1 + 2 + 3 + 5 = 4 + 7. The second one contains only inequalities: 1 + 3 < 5; 1 + 2 + 5 < 3 + 6; 5 + 6 < 2 + 3 + 7.

n = 8

Moving to the next index, a(8) = 3 and the first weighing is: 1 + 2 + 3 + 4 + 5 = 7 + 8. The second weighing is: 1 + 2 + 5 < 4 + 6. After that we have identified all coins but two groups {1,2} and {3,4} that can be resolved by 2 + 4 = 6.

More Examples and a Paper

Meanwhile my blog received a comment from Konstantin Knop who claimed that he found solutions in three weighings for n in the range between 12 and 17 inclusive and four weighings for n = 53. I had already corresponded with Konstantin and knew that his claims are always well-founded, so I didn’t doubt that he had found the solutions.

Later I began to write a paper with Joel Lewis on the upper bound of the omni-sequence, where we prove that a(n) ≤ 2⌈log2 n⌉. For this paper, we wanted a comprehensive set of examples, so I emailed Konstantin asking him to write up his solutions. He promptly sent me the results and mentioned that he had found the weighings together with Ilya Bogdanov. They used several different ideas in the solutions. First I’ll describe their solutions based on ideas we’ve already seen, namely to compare the lightest coins in the range to the heaviest coins.

n = 13 and n = 15

Here is the proof that a(13) = 3. The first weighing is: 1 + … + 8 = 11 + 12 + 13, and it identifies the groups {1, 2, 3, 4, 5, 6, 7, 8}, {9, 10} and {11, 12, 13}. The second weighing is: (1 + 2 + 3) + 9 + (11 + 12) = (7 + 8) + 10 + 13, and it divides them further into groups {1, 2, 3}, {4, 5, 6}, {7, 8}, {9}, {10}, {11, 12}, {13}. And the last weighing identifies all the coins: 1 + 4 + 7 + 11 + 9 + 10 = 3 + 6 + 8 + 12 + 13.

Similarly, let us show that a(15) = 3. The first weighing is: 1 + … + 7 < 14 + 15, and it divides the coins into three groups {1, 2, 3, 4, 5, 6, 7}, {8, 9, 10, 11, 12, 13}, and {14, 15}. The second weighing is: (1 + 2 + 3) + 8 + (14 + 15) = (5 + 6 + 7) + (12 + 13), and this divides them further into groups {1, 2, 3}, {4}, {5, 6, 7}, {8}, {9, 10, 11}, {12, 13} and {14, 15}. The third weighing identifies every coin: 1 + 5 + 8 + 9 + 12 + 14 = 3 + 7 + 11 + 13 + 15.

n = 9 and n = 12: Heaviest vs Lightest. Almost, but not Quite

As I mentioned earlier it is not always possible to find the first weighing which will nicely divide the coins into groups. We already discussed an example, n = 5, in which neither of the two weighings divided the coins into groups. Likewise, the same thing happened in the second mysterious solution for n = 6. What these solutions have in common is that the first weighing nearly divides everything nicely. The left pan is almost the set of the lightest coins and the right pan is almost the set of the heaviest coins. But not quite.

These are not the only situations in which the first weighing does not quite divide the coins into groups. For example, here is Konstantin’s solution for a(9) = 3. For the first weighing, we put five coins on the left pan and two coins on the right pan. The left pan is lighter. This could happen in three different ways:

- A. 1 + 2 + 3 + 4 + 5 < 8 + 9 (out 6 and 7)

- B. 1 + 2 + 3 + 4 + 5 < 7 + 9 (out 6 and 8)

- C. 1 + 2 + 3 + 4 + 6 < 8 + 9 (out 5 and 7)

The second weighing, 1 + 2 + 3 = 6, in which we took three coins from the left pan and balanced them against one coin – again from the left pan – could only happen in case “C.” After the two weighings, the following groups were identified: {1, 2, 3}, {4}, {5, 7}, {6}, {8, 9}. The third weighing, 1 + 4 + 5 + 8 < 3 + 7 + 9, identifies all the coins.
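The case analysis for n = 9 can be reproduced mechanically. The following sketch (helper names are invented for this illustration) lists every way five coins can be lighter than two other coins, and then keeps only the cases in which three coins from the left pan can balance a fourth coin from the same pan, as the second weighing requires.

```python
from itertools import combinations

def lighter_left_cases(n, left_size, right_size):
    """List every (left, right, out) split of coins 1..n with the given pan
    sizes in which the left pan is strictly lighter."""
    coins = range(1, n + 1)
    cases = []
    for left in combinations(coins, left_size):
        rest = [c for c in coins if c not in left]
        for right in combinations(rest, right_size):
            if sum(left) < sum(right):
                out = tuple(c for c in rest if c not in right)
                cases.append((left, right, out))
    return cases

def three_can_balance_one(pan):
    """True if some coin in the pan weighs as much as three others from it."""
    return any(sum(trio) == single
               for single in pan
               for trio in combinations([c for c in pan if c != single], 3))

cases = lighter_left_cases(9, 5, 2)
survivors = [case for case in cases if three_can_balance_one(case[0])]
```

Here `cases` contains exactly the three splits listed above (not necessarily in the same order), and `survivors` contains only the split with 1, 2, 3, 4, 6 on the left pan, 8 and 9 on the right, and 5 and 7 left out.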

A similar technique is used in the solution that Konstantin sent to us to demonstrate that a(12) = 3. The first weighing is: 1 + 2 + 3 + 4 + 5 + 6 < 10 + 12. The audience which sees the results of the weighings understands that there are three possibilities for the distribution of coins:

- A. 1 + 2 + 3 + 4 + 5 + 6 < 10 + 12

- B. 1 + 2 + 3 + 4 + 5 + 6 < 11 + 12

- C. 1 + 2 + 3 + 4 + 5 + 7 < 11 + 12

The second weighing, (1 + 2 + 3) + (7 + 8) + 10 < (9 + 11) + 12, convinces the audience that the left pan must weigh at least 31 if the first weighing was case “A” above (31 = 1 + 2 + 3 + 7 + 8 + 10) or “C” (31 = 1 + 2 + 3 + 6 + 8 + 11), and at least 32 (32 = 1 + 2 + 3 + 7 + 8 + 11) if the first weighing was case “B.” At the same time the right pan is not more than 12 + 9 + 11 = 32 for case “A” above, not more than 12 + 9 + 10 = 31 for case “B” and not more than 12 + 9 + 10 = 31 for case “C.”

Hence the inequality in the second weighing is only possible when the first weighing was indeed as described by case “A” above. Consequently, the first two weighings together identify groups: {1, 2, 3}, {4, 5, 6}, {7, 8}, {9}, {10}, {11} and {12}. The third weighing, 1 + 4 + 7 + 11 + 12 < 3 + 6 + 8 + 9 + 10, identifies all the coins.
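The bounds used in this argument for n = 12 are easy to recompute. In the second weighing the left pan holds three coins from the first left pan, two coins that were left out and one coin from the first right pan, while the right pan holds two left-out coins and one coin from the first right pan. The sketch below (names are illustrative) computes, for each of the three candidate splits, the lightest possible left pan and the heaviest possible right pan.

```python
def second_weighing_bounds(first_left, left_out, first_right):
    """Return (minimum weight of the left pan, maximum weight of the right pan)
    for the second weighing, given a candidate split from the first weighing."""
    first_left = sorted(first_left)
    left_out = sorted(left_out)
    first_right = sorted(first_right)
    min_left_pan = sum(first_left[:3]) + sum(left_out[:2]) + first_right[0]
    max_right_pan = sum(left_out[-2:]) + first_right[-1]
    return min_left_pan, max_right_pan

# The three ways six coins can be lighter than two coins when n = 12.
splits = {
    'A': ({1, 2, 3, 4, 5, 6}, {7, 8, 9, 11}, {10, 12}),
    'B': ({1, 2, 3, 4, 5, 6}, {7, 8, 9, 10}, {11, 12}),
    'C': ({1, 2, 3, 4, 5, 7}, {6, 8, 9, 10}, {11, 12}),
}
bounds = {name: second_weighing_bounds(*split) for name, split in splits.items()}
# A lighter left pan is only possible if its minimum is below the right maximum.
possible = [name for name, (lo, hi) in bounds.items() if lo < hi]
```

The computed pairs are (31, 32) for A, (32, 31) for B and (31, 31) for C, so only case A leaves room for the left pan to be lighter.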

Rearrangement Inequality: n = 6, n = 14, n = 16, n = 17 and n = 53

Other cases that Konstantin Knop sent me used a completely different technique. I would like to explain this technique using the mysterious solution for n = 6 found by Max Alekseyev. Suppose we have six coins labeled c1, …, c6. The first weighing is: c1 + c3 < c5. The second weighing is: c1 + c2 + c5 < c3 + c6.

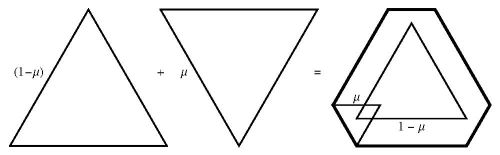

Let us prove that these two weighings identify all the coins. Let us replace the two inequalities above with the following: c1 + c3 − c5 ≤ −1, and c1 + c2 + c5 − c3 − c6 ≤ −1. Now we multiply the first inequality by 3 and the second by 2 and sum the results. We get: 5c1 + 2c2 + c3 + 0c4 − c5 − 2c6 ≤ −5. Note that the coefficients for labels are in a decreasing order. By the rearrangement inequality the smallest value the expression 5c1 + 2c2 + c3 + 0c4 − c5 − 2c6 reaches is when the labels on the coins match the indices. This smallest value is −5. Hence, the labels have to match the coins.
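The computation in this proof is small enough to check exhaustively. The sketch below (variable names are illustrative) builds the combined coefficients from the two weighings, then evaluates the expression for all 720 ways of assigning the weights 1, …, 6 to the labels and records where the minimum is reached.

```python
from itertools import permutations

# Coefficient of c1..c6 in each weighing: +1 on the left pan, -1 on the right.
first = [1, 0, 1, 0, -1, 0]      # c1 + c3 - c5
second = [1, 1, -1, 0, 1, -1]    # c1 + c2 + c5 - c3 - c6

# Multiply the first weighing by 3, the second by 2, and add them.
combined = [3 * a + 2 * b for a, b in zip(first, second)]

def value(weights):
    """Value of the combined expression when label i carries weights[i]."""
    return sum(k * w for k, w in zip(combined, weights))

values = {p: value(p) for p in permutations(range(1, 7))}
smallest = min(values.values())
minimisers = [p for p, v in values.items() if v == smallest]
```

The combined coefficients come out as 5, 2, 1, 0, −1, −2, the smallest value is −5, and the only assignment reaching it is the one in which every label matches its coin, which is what the rearrangement inequality predicts for strictly decreasing coefficients.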

The technique that Konstantin and his collaborators are using is to search for appropriate coefficients to multiply the weighings by, rather than searching for the weighings themselves. In lieu of lengthy explanations, I will just list the weighings that they use together with the coefficients to multiply them by in their proof that the weighings differentiate the coins.

We will start with showing that a(14) = 3. The weighings are: 1 + 2 + 3 + 4 + 5 + 6 + 7 + 9 < 11 + 13 + 14, and: 1 + 2 + 3 + 8 + 11 + 13 = 7 + 9 + 10 + 12, followed by 1 + 4 + 7 + 10 = 3 + 6 + 13. The coefficients to multiply by are {9, 5, 2}.

Next we will show that a(16) = 3. The weighings are: 1 + 2 + 3 + 4 + 5 + 6 + 8 < 14 + 16, and 1 + 2 + 3 + 7 + 9 + 14 = 8 + 13 + 15, followed by 1 + 4 + 7 + 10 + 13 < 3 + 6 + 12 + 15. The coefficients to multiply by are {11, 5, 2}.

Next we will show that a(17) = 3. The weighings are: 1 + 2 + 3 + 4 + 5 + 6 + 7 + 9 + 10 < 15 + 16 + 17, and 1 + 2 + 3 + 8 + 11 + 15 + 16 < 7 + 9 + 10 + 14 + 17, and 1 + 4 + 7 + 8 + 12 + 14 = 3 + 6 + 10 + 11 + 16. The coefficients to multiply by are {11, 5, 2}.

Next we will show that a(53) = 4. The weighings are: (1 + 2 + … + 23) + 25 < 47 + (49 + … + 53), and (1 + … + 9) + 24 + (26 + … + 31) + 47 + (49 + … + 52) < (16 + … + 23) + 25 + (41 + … + 46) + 48 + (51 + 52), and (1 + 2 + 3) + (10 + 11) + (16 + 17 + 18) + 24 + (26 + 27) + (32 + 33 + 34) + (41 + 42 + 43) + 47 + 49 + 53 = (7 + 8 + 9) + 15 + (22 + 23) + 25 + (30 + 31) + (38 + 39) + 40 + (45 + 46) + 48 + (51 + 52), and the last one 1 + 4 + 7 + 10 + 12 + 16 + 19 + 22 + 24 + 28 + 30 + 32 + 35 + 38 + 41 + 45 + 47 + 51 + 53 < 3 + 6 + 9 + 11 + 14 + 18 + 21 + 25 + 27 + 29 + 34 + 37 + 40 + 43 + 48 + 49 + 50 + 52. The coefficients to multiply by are {43, 15, 5, 2}.
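A list of weighings with multipliers can be checked without any search. The sketch below is one possible checker, written under these assumptions: the multipliers are positive integers, an unbalanced weighing contributes "left minus right ≤ −1" and a balanced one contributes "left minus right = 0". It adds up the multiplied weighings, checks that the resulting coefficients strictly decrease from coin 1 to coin n, and checks that the true weights reach the bound exactly. By the rearrangement inequality, no other assignment can then satisfy all the weighings. The function name and data layout are invented for this illustration.

```python
def certificate_holds(n, weighings, multipliers):
    """Check a rearrangement-inequality certificate.

    weighings: triples (left, right, outcome), coins named by true weight,
    outcome '<' (left pan lighter) or '=' (balanced).
    multipliers: one positive integer per weighing.
    """
    if any(m <= 0 for m in multipliers):
        return False
    coefficient = [0] * (n + 1)          # index 0 is unused
    bound = 0
    for (left, right, outcome), m in zip(weighings, multipliers):
        for c in left:
            coefficient[c] += m
        for c in right:
            coefficient[c] -= m
        if outcome == '<':
            bound -= m                   # integer weights: left - right <= -1
    strictly_decreasing = all(
        coefficient[c] > coefficient[c + 1] for c in range(1, n))
    value_at_truth = sum(coefficient[c] * c for c in range(1, n + 1))
    return strictly_decreasing and value_at_truth == bound

n14 = [
    ([1, 2, 3, 4, 5, 6, 7, 9], [11, 13, 14], '<'),
    ([1, 2, 3, 8, 11, 13], [7, 9, 10, 12], '='),
    ([1, 4, 7, 10], [3, 6, 13], '='),
]
n17 = [
    ([1, 2, 3, 4, 5, 6, 7, 9, 10], [15, 16, 17], '<'),
    ([1, 2, 3, 8, 11, 15, 16], [7, 9, 10, 14, 17], '<'),
    ([1, 4, 7, 8, 12, 14], [3, 6, 10, 11, 16], '='),
]
ok14 = certificate_holds(14, n14, [9, 5, 2])
ok17 = certificate_holds(17, n17, [11, 5, 2])
```

Both `ok14` and `ok17` are true. A failed check does not mean the weighings fail to identify the coins, only that this particular set of multipliers does not prove it.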

The Search Continues for n = 18 and n = 19

When I was working on the paper with Joel Lewis I re-established my email discussions about the Baron’s omni-sequence with Konstantin Knop. At that time Konstantin’s colleague, Maxim Kalenkov, got interested in the subject and wrote a computer search program to find other solutions that can be proven with the rearrangement inequality. Thus, we know two more terms of this sequence.

The next known term is a(18) = 3. The weighings are: 1 + 2 + 4 + 5 + 7 + 10 + 12 = 9 + 15 + 17, and 1 + 3 + 4 + 6 + 9 + 11 + 17 = 7 + 12 + 14 + 18, and 2 + 3 + 7 + 8 + 9 + 14 + 15 = 4 + 10 + 11 + 16 + 17. The corresponding coefficients are: {8, 7, 5}.

Similarly, a(19) = 3. The weighings are: 1 + 2 + 3 + 4 + 5 + 7 + 8 + 10 + 13 = 16 + 18 + 19, and 1 + 2 + 3 + 6 + 9 + 11 + 16 = 8 + 10 + 13 + 17, and 1 + 4 + 6 + 8 + 12 + 18 = 3 + 7 + 11 + 13 + 15. The coefficients are {12, 7, 3}.

Solutions in Four Weighings for n from 20 to 58

Maxim Kalenkov continued his search. He didn’t find any new solutions in three weighings, but he found a lot of solutions in four weighings, namely for numbers from 20 to 58. Below are his solutions, with multiplier coefficients in front of every weighing:

a(20) ≤ 4

18: 1+2+3+4+5+10+14+16+18 = 6+7+11+12+17+20

19: 1+2+4+5+12+15+17+19 < 6+8+10+14+18+20

21: 2+6+11+17+18 = 4+9+10+15+16

26: 1+6+7+8+9+10+20 = 2+5+17+18+19

a(21) ≤ 4

18: 3+5+6+11+15+17+19 = 7+8+9+13+18+21

19: 4+6+9+13+16+18+20 = 1+7+11+12+15+19+21

21: 1+4+5+7+12+18+19 = 3+9+10+11+16+17

26: 1+2+3+7+8+9+10+11+21 = 4+5+6+18+19+20

a(22) ≤ 4

18: 3+5+6+12+15+17+20 = 7+8+10+13+18+22

19: 1+2+4+10+13+16+18+21 = 7+9+12+15+20+22

21: 1+6+7+18+19+20 = 2+3+10+11+12+16+17

26: 2+3+7+8+9+10+11+12+22 = 6+18+19+20+21

a(23) ≤ 4

18: 1+2+5+6+12+16+18+22 < 3+7+8+10+13+19+23

19: 1+3+4+6+10+17+19+21 = 7+9+12+14+16+23

21: 3+7+13+14+19+20 = 2+6+10+11+12+17+18

26: 2+7+8+9+10+11+12+23 = 19+20+21+22

a(24) ≤ 4

18: 1+3+6+12+17+19+21+23 < 2+4+7+8+10+13+15+20+24

19: 1+2+4+5+6+10+15+18+20+22 < 7+9+12+14+17+21+24

21: 4+7+13+14+20+21 = 3+6+10+11+12+18+19

26: 2+3+7+8+9+10+11+12+24 = 20+21+22+23

a(25) ≤ 4

18: 1+2+3+5+6+8+9+14+17+19+22 < 10+12+16+20+24+25

19: 2+6+7+9+12+16+18+20+23 = 10+11+14+15+17+22+24

21: 1+2+4+7+8+10+15+20+21+22 = 3+6+12+13+14+18+19+25

26: 3+10+11+12+13+14+24+25 = 2+7+8+9+20+21+22+23

a(26) ≤ 4

18: 1+2+3+5+6+8+9+14+18+20+23 = 10+12+15+21+25+26

19: 2+3+4+6+7+9+12+19+21+24 = 11+14+16+18+23+25

21: 7+8+15+16+21+22+23 = 2+6+12+13+14+19+20+26

26: 1+2+10+11+12+13+14+25+26 = 7+8+9+21+22+23+24

a(27) ≤ 4

18: 1+3+4+6+7+8+9+14+19+21+23+25 < 10+11+13+15+17+22+26+27

19: 1+2+3+5+7+9+13+17+20+22+24 < 4+10+12+14+16+19+23+26

21: 2+3+4+8+10+15+16+22+23 = 1+7+13+14+20+21+27

26: 1+10+11+12+13+14+26+27 = 3+8+9+22+23+24+25

a(28) = 4

18: 3+6+8+9+10+15+19+21+24+26 = 1+5+11+13+16+18+22+27+28

19: 1+4+5+7+9+10+13+18+20+22+25 = 3+6+11+12+15+17+19+24+27

21: 5+6+11+16+17+22+23+24 = 4+9+13+14+15+20+21+28

26: 1+2+3+4+11+12+13+14+15+27+28 = 10+22+23+24+25+26

a(29) = 4

18: 1+3+5+6+7+9+10+16+20+22+25+27 < 11+12+14+17+18+23+28+29

19: 4+8+10+14+18+21+23+26 = 2+3+6+11+13+16+20+25+28

21: 1+2+6+8+9+11+17+23+24+25 = 4+5+14+15+16+21+22+29

26: 2+3+4+5+11+12+13+14+15+16+28+29 = 8+9+10+23+24+25+26+27

a(30) = 4

18: 2+8+10+16+21+23+26+27+28 = 5+11+12+14+17+19+24+29+30

19: 2+4+5+7+9+14+19+22+24+28 = 11+13+16+18+21+26+29

21: 1+5+6+9+10+11+17+18+24+25+26 = 4+14+15+16+22+23+28+30

26: 1+3+4+11+12+13+14+15+16+29+30 < 9+10+24+25+26+27+28

a(31) = 4

18: 1+2+6+9+10+16+21+23+26+28+29 < 3+4+7+11+12+14+17+19+24+30+31

19: 1+2+4+7+14+19+22+24+27+29 < 6+9+11+13+16+18+21+26+30

21: 2+3+7+8+9+11+17+18+24+25+26 = 14+15+16+22+23+29+31

26: 1+3+4+5+6+11+12+13+14+15+16+30+31 = 2+24+25+26+27+28+29

a(32) = 4

18: 1+5+8+9+10+12+13+22+24+27+29 = 6+14+16+18+20+25+30+31

19: 4+6+10+11+13+16+20+23+25+28 = 1+2+8+15+19+22+27+30+32

21: 1+2+6+7+8+11+12+18+19+25+26+27 = 4+5+10+16+17+23+24+31+32

26: 1+2+3+4+5+14+15+16+17+30+31+32 < 11+12+13+25+26+27+28+29

a(33) = 4

18: 1+2+6+7+8+9+10+12+13+23+27+29+30 = 3+14+15+17+19+21+25+31+32

19: 1+2+10+11+13+17+21+24+25+28+30 = 4+6+8+14+16+20+23+27+31+33

21: 1+3+4+8+11+12+14+19+20+25+26+27 < 7+10+17+18+24+30+32+33

26: 3+4+5+6+7+14+15+16+17+18+31+32+33 = 11+12+13+25+26+27+28+29+30

a(34) = 4

18: 2+3+4+8+10+12+13+19+24+28+30+31 < 6+14+15+17+20+22+26+32+33

19: 1+2+3+5+6+9+11+13+17+22+25+26+29+31 = 4+8+14+16+19+21+24+28+32+34

21: 1+3+6+7+8+11+12+14+20+21+26+27+28 = 2+5+17+18+19+25+31+33+34

26: 1+2+4+5+14+15+16+17+18+19+32+33+34 = 3+11+12+13+26+27+28+29+30+31

a(35) = 4

18: 1+3+4+6+8+10+11+13+19+24+26+29+31+32 = 14+15+17+20+22+27+33+34+35

19: 1+4+7+9+11+12+17+22+25+27+30+32 = 6+14+16+19+21+24+29+33+35

21: 2+12+13+14+20+21+27+28+29+35 = 4+7+8+11+17+18+19+25+26+32+34

26: 1+2+3+4+5+6+7+8+14+15+16+17+18+19+33+34 = 12+13+27+28+29+30+31+32

a(36) = 4

18: 1+2+3+4+5+9+11+12+14+15+20+25+29+31+32 < 6+16+18+21+23+27+33+34+36

19: 1+2+4+5+6+8+10+12+13+15+18+23+26+27+30+32 < 16+17+20+22+25+29+33+35+36

21: 1+3+5+13+14+16+21+22+27+28+29+36 = 2+8+9+12+18+19+20+26+32+34+35

26: 2+6+7+8+9+16+17+18+19+20+33+34+35 = 5+13+14+15+27+28+29+30+31+32

a(37) = 4

18: 1+2+3+6+8+10+12+13+15+16+21+27+30+32+33 = 4+9+17+19+22+24+28+34+35+37

19: 1+2+3+7+9+11+13+14+16+19+24+26+28+31+33 = 5+6+10+17+18+21+23+30+34+36+37

21: 3+4+5+9+10+14+15+17+22+23+28+29+30+37 = 1+7+8+13+19+20+21+26+27+33+35+36

26: 1+4+5+6+7+8+17+18+19+20+21+34+35+36 = 3+14+15+16+28+29+30+31+32+33

a(38) = 4

18: 2+3+4+7+9+11+13+15+16+21+26+28+31+33+34 = 5+10+17+18+19+22+24+29+35+36+38

19: 1+3+4+8+10+12+13+14+16+19+24+27+29+32+34 = 7+11+17+21+23+26+31+35+37+38

21: 1+2+4+5+10+11+14+15+17+22+23+29+30+31+38 = 8+9+13+19+20+21+27+28+34+36+37

26: 5+6+7+8+9+17+18+19+20+21+35+36+37 = 4+14+15+16+29+30+31+32+33+34

a(39) = 4

18: 1+2+4+7+9+11+13+14+16+22+27+29+32+34+35 = 10+17+18+20+23+25+30+36+38+39

19: 2+3+4+8+10+12+14+15+20+25+28+30+33+35 = 5+7+11+17+19+22+24+27+32+37+38

21: 1+2+3+5+10+11+15+16+17+23+24+30+31+32+38 < 8+9+14+20+21+22+28+29+35+36+37

26: 1+5+6+7+8+9+17+18+19+20+21+22+36+37 = 15+16+30+31+32+33+34+35

a(40) = 4

18: 1+2+3+4+5+8+9+12+14+15+17+18+23+27+29+32+34+35 < 7+10+19+21+24+26+30+36+37+39+40

19: 1+3+4+5+7+10+13+15+16+18+21+26+28+30+33+35 < 6+8+12+20+23+25+27+32+36+38+39

21: 1+5+6+10+11+12+16+17+24+25+30+31+32+39 < 3+9+15+21+22+23+28+29+35+37+38

26: 2+3+6+7+8+9+19+20+21+22+23+36+37+38 = 5+16+17+18+30+31+32+33+34+35

a(41) = 4

18: 1+3+5+6+8+10+12+14+15+17+18+23+28+30+33+35+36 < 7+11+19+21+24+26+31+37+38+40+41

19: 1+2+4+5+6+7+9+11+13+15+16+18+21+26+29+31+34+36 = 8+12+19+20+23+25+28+33+37+39+40

21: 1+4+6+11+12+16+17+19+24+25+31+32+33+40 < 9+10+15+21+22+23+29+30+36+38+39

26: 2+3+7+8+9+10+19+20+21+22+23+37+38+39 = 6+16+17+18+31+32+33+34+35+36

a(42) = 4

18: 1+3+5+6+7+11+13+15+16+18+24+29+31+34+36+37 = 2+8+12+19+20+22+25+27+32+38+40+41

19: 1+2+4+6+7+10+12+14+16+17+18+22+27+30+32+35+37 = 3+13+19+21+24+26+29+34+39+40+42

21: 1+2+3+4+5+7+8+12+13+17+19+25+26+32+33+34+40 = 10+11+16+22+23+24+30+31+37+38+39

26: 2+3+8+9+10+11+19+20+21+22+23+24+38+39 = 7+17+18+32+33+34+35+36+37

a(43) = 4

18: 1+2+5+6+7+10+12+14+16+17+19+20+29+31+34+36+37 = 8+21+23+25+27+32+38+39+41+42

19: 2+4+5+6+7+8+11+15+17+18+20+23+27+30+32+35+37 = 10+14+22+26+29+34+38+40+41+43

21: 1+2+3+7+13+14+18+19+25+26+32+33+34+41 < 5+11+12+17+23+24+30+31+37+39+40

26: 1+3+4+5+8+9+10+11+12+21+22+23+24+38+39+40 < 7+18+19+20+32+33+34+35+36+37

a(44) = 4

18: 1+2+5+6+7+8+10+11+14+16+17+19+20+25+30+32+35+37+38 = 3+12+21+22+23+26+28+33+39+40+42+44

19: 1+2+3+7+8+12+15+17+18+20+23+28+31+33+36+38+44 = 9+10+14+21+25+27+30+35+39+41+42+43

21: 2+3+4+6+8+9+12+13+14+18+19+21+26+27+33+34+35+42 = 11+17+23+24+25+31+32+38+40+41+44

26: 1+3+4+5+9+10+11+21+22+23+24+25+39+40+41 = 8+18+19+20+33+34+35+36+37+38

a(45) = 4

18: 2+4+5+6+11+13+16+17+18+20+21+26+31+33+36+38+39 = 7+9+14+22+24+27+29+34+40+42+43+45

19: 1+2+4+6+9+12+14+18+19+21+24+29+32+34+37+39+45 = 8+11+16+23+26+28+31+36+40+41+42+44

21: 1+2+3+5+7+8+14+15+16+19+20+27+28+34+35+36+42 = 4+12+13+18+24+25+26+32+33+39+41+45

26: 1+3+4+7+8+9+10+11+12+13+22+23+24+25+26+40+41 = 19+20+21+34+35+36+37+38+39

a(46) = 4

18: 1+2+3+5+6+8+9+14+16+17+19+21+22+26+31+33+36+38+39 = 10+12+23+27+29+34+40+41+42+43+45

19: 2+4+6+7+8+9+12+15+18+20+22+29+32+34+37+39+45 = 3+11+14+17+23+24+26+28+31+36+40+42+44

21: 1+3+7+9+10+11+17+20+21+23+27+28+34+35+36+42 = 6+15+16+25+26+32+33+39+41+45+46

26: 1+2+3+4+5+6+10+11+12+13+14+15+16+23+24+25+26+40+41 = 9+20+21+22+34+35+36+37+38+39

a(47) = 4

18: 2+3+5+7+8+9+13+14+16+18+19+21+22+27+32+34+37+39+40 < 11+23+24+28+30+35+41+42+43+44+46

19: 1+3+6+7+8+9+11+17+19+20+22+30+33+35+38+40+46 < 5+10+13+16+23+25+27+29+32+37+41+43+45

21: 1+2+4+5+9+10+15+16+20+21+23+28+29+35+36+37+43 < 7+14+19+26+27+33+34+40+42+46+47

26: 1+2+3+4+5+6+7+10+11+12+13+14+23+24+25+26+27+41+42 < 9+20+21+22+35+36+37+38+39+40

a(48) = 4

18: 3+5+6+7+8+13+17+19+20+22+23+28+33+35+38+40+41 < 9+11+15+24+26+29+31+36+42+44+45+47

19: 1+4+6+8+11+14+15+18+20+21+23+26+31+34+36+39+41+47 < 3+10+13+17+24+25+28+30+33+38+42+43+44+46

21: 1+2+3+7+8+9+10+15+16+17+21+22+24+29+30+36+37+38+44 = 6+14+20+26+27+28+34+35+41+43+47+48

26: 1+2+3+4+5+6+9+10+11+12+13+14+24+25+26+27+28+42+43 = 8+21+22+23+36+37+38+39+40+41

a(49) = 4

18: 2+3+6+7+8+9+10+15+18+20+21+23+24+29+34+38+40+41+49 < 12+16+25+26+30+32+36+42+43+44+45+47

19: 1+3+5+7+9+10+12+14+16+19+21+22+24+32+35+36+39+41+47 < 11+18+25+27+29+31+34+38+42+44+46+49

21: 1+2+3+4+8+10+11+16+17+18+22+23+25+30+31+36+37+38+44 < 7+14+15+21+28+29+35+41+43+47+48+49

26: 1+2+4+5+6+7+11+12+13+14+15+25+26+27+28+29+42+43 = 10+22+23+24+36+37+38+39+40+41

a(50) = 4

18: 1+2+3+4+6+7+9+10+11+15+16+19+20+21+23+24+34+36+39+41+42+50 = 12+17+25+26+28+30+32+37+43+44+45+46+48

19: 2+3+5+7+8+10+11+17+21+22+24+28+32+35+37+40+42+48 = 4+13+15+19+25+27+31+34+39+43+45+47+50

21: 1+3+4+8+9+11+12+13+17+18+19+22+23+25+30+31+37+38+39+45 = 7+16+21+28+29+35+36+42+44+48+49+50

26: 1+2+4+5+6+7+12+13+14+15+16+25+26+27+28+29+43+44 = 11+22+23+24+37+38+39+40+41+42

a(51) = 4

18: 2+3+4+6+8+10+11+15+17+19+20+21+23+24+30+35+37+40+42+43+50 < 12+13+18+25+26+28+31+33+38+44+46+47+49+51

19: 1+3+4+7+9+11+13+16+18+21+22+24+28+33+36+38+41+43+49 < 6+15+19+25+27+30+32+35+40+45+46+48+50

21: 1+2+4+5+6+9+10+11+12+18+19+22+23+25+31+32+38+39+40+46+51 < 16+17+21+28+29+30+36+37+43+44+45+49+50

26: 1+2+3+5+6+7+8+12+13+14+15+16+17+25+26+27+28+29+30+44+45 < 11+22+23+24+38+39+40+41+42+43+51

a(52) = 4

18: 2+5+7+8+10+11+12+17+19+22+25+26+31+35+37+40+42+43+51 = 3+13+15+20+27+28+29+32+38+44+46+47+49+52

19: 1+2+3+6+8+9+11+12+15+18+20+23+24+26+29+36+38+41+43+49 = 5+14+17+22+27+31+33+35+40+45+46+48+51

21: 1+3+4+5+9+10+12+13+14+20+21+22+24+25+27+32+33+38+39+40+46+52 = 8+18+19+29+30+31+36+37+43+44+45+49+50+51

26: 1+2+3+4+5+6+7+8+13+14+15+16+17+18+19+27+28+29+30+31+44+45 = 12+24+25+26+38+39+40+41+42+43+52

a(53) = 4

18: 2+3+4+7+8+9+10+11+12+17+19+21+23+25+26+31+36+38+41+43+44+52 < 5+13+15+27+29+32+34+39+45+46+47+48+50+53

19: 1+3+4+5+9+11+12+15+18+22+23+24+26+29+34+37+39+42+44+50 = 7+14+17+21+27+28+31+33+36+41+45+47+49+52

21: 1+2+4+5+6+7+10+12+13+14+20+21+24+25+27+32+33+39+40+41+47+53 < 9+18+19+23+29+30+31+37+38+44+46+50+51+52

26: 1+2+3+5+6+7+8+9+13+14+15+16+17+18+19+27+28+29+30+31+45+46 = 12+24+25+26+39+40+41+42+43+44+53

a(54) = 4

18: 2+3+4+6+8+9+11+12+13+18+20+23+25+26+28+29+33+37+39+41+42+43+51 = 5+14+16+21+30+31+32+35+44+45+47+48+49+52+54

19: 1+3+4+5+7+9+10+12+13+16+19+21+24+26+27+29+32+35+38+43+49+54 < 6+15+18+23+30+33+34+37+41+44+46+47+51+53

21: 1+2+4+5+6+10+11+13+14+15+21+22+23+27+28+30+34+40+41+47+52+53 < 9+19+20+26+32+33+38+39+43+45+46+49+50+51

26: 1+2+3+5+6+7+8+9+14+15+16+17+18+19+20+30+31+32+33+44+45+46 < 13+27+28+29+40+41+42+43+52+53+54

a(55) = 4

18: 2+3+6+8+9+11+12+13+18+19+22+24+25+27+28+32+37+39+42+44+45+54 < 4+14+16+20+29+31+33+35+40+46+47+49+50+52+55

19: 1+2+3+4+7+9+10+12+13+16+20+23+25+26+28+31+35+38+40+43+45+52 < 6+15+18+22+30+32+34+37+42+46+48+49+51+54

21: 1+3+4+5+6+10+11+13+14+15+20+21+22+26+27+33+34+40+41+42+49+55 = 9+19+25+31+32+38+39+45+47+48+52+53+54

26: 1+2+4+5+6+7+8+9+14+15+16+17+18+19+29+30+31+32+46+47+48 = 13+26+27+28+40+41+42+43+44+45+55

a(56) = 4

18: 2+3+4+7+9+10+12+13+14+18+20+23+25+26+28+34+39+41+44+46+47+55 < 5+15+16+21+29+30+32+35+37+42+48+50+52+53+56

19: 1+3+4+5+8+10+11+13+14+16+19+21+24+26+27+28+32+37+40+42+45+47+52 < 7+18+23+29+31+34+36+39+44+49+51+54+55+56

21: 1+2+4+5+6+7+11+12+14+15+21+22+23+27+29+35+36+42+43+44+53+54 < 10+19+20+26+32+33+34+40+41+47+48+49+52+56

26: 1+2+3+5+6+7+8+9+10+15+16+17+18+19+20+29+30+31+32+33+34+48+49+56 = 14+27+28+42+43+44+45+46+47+53+54+55

a(57) = 4

18: 2+3+4+7+9+10+12+14+18+19+22+24+27+32+35+36+39+43+45+49+51 < 5+13+16+21+26+28+31+34+38+41+42+48+50+53+56

21: 1+3+4+5+8+10+11+14+16+18+20+21+25+29+31+35+38+40+41+46+51+53+57 < 7+15+19+24+26+30+36+37+39+42+45+47+48+52+55+56

25: 1+2+4+5+6+7+11+12+13+15+18+21+23+24+26+29+32+34+37+41+44+45+48+55 = 10+20+22+31+33+36+40+43+47+51+53+54+56+57

33: 1+2+3+5+6+7+8+9+10+13+15+16+17+19+20+22+26+28+30+31+33+36+42+47+56 = 18+29+32+35+41+44+45+46+49+51+55+57

a(58) = 4

17: 2+3+4+8+9+10+12+13+14+21+22+25+26+27+29+30+37+42+43+45+46+47+56 < 5+15+16+20+31+32+33+35+36+41+48+49+51+52+53+55

20: 1+3+4+5+7+10+11+13+14+16+19+20+24+27+28+30+33+36+40+41+44+47+53 = 8+17+21+25+31+37+38+42+45+48+50+51+56+57

21: 1+2+4+5+6+8+11+12+14+15+17+20+23+25+28+29+31+35+38+41+45+51+55+57 < 10+19+22+27+33+34+37+40+43+47+49+50+53+54+56

26: 1+2+3+5+6+7+8+9+10+15+16+17+18+19+21+22+31+32+33+34+37+48+49+50 < 14+28+29+30+41+44+45+46+47+55+57+58
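With this many numbers, a small script helps to catch transcription slips. The sketch below (function names are invented for this illustration) parses a line in the format used above, "multiplier: left pan = right pan" or "multiplier: left pan < right pan", and checks that no coin appears twice and that the stated outcome agrees with the sums. It also reports the largest coin mentioned in a block, which should equal the n in the block’s heading.

```python
def parse_weighing(line):
    """Split '21: 2+6+11 = 4+9+10' into (multiplier, left, outcome, right)."""
    multiplier, _, body = line.partition(':')
    outcome = '<' if '<' in body else '='
    left, _, right = body.partition(outcome)

    def coins(side):
        return [int(token) for token in side.split('+')]

    return int(multiplier), coins(left), outcome, coins(right)

def weighing_is_sound(left, outcome, right):
    """No repeated coin, and the sums agree with the stated outcome."""
    used = left + right
    if len(set(used)) != len(used):
        return False
    if outcome == '<':
        return sum(left) < sum(right)
    return sum(left) == sum(right)

def largest_coin(lines):
    """Largest coin that appears in any weighing of a block."""
    return max(max(left + right)
               for _, left, _, right in map(parse_weighing, lines))

block_20 = [
    '18: 1+2+3+4+5+10+14+16+18 = 6+7+11+12+17+20',
    '19: 1+2+4+5+12+15+17+19 < 6+8+10+14+18+20',
    '21: 2+6+11+17+18 = 4+9+10+15+16',
    '26: 1+6+7+8+9+10+20 = 2+5+17+18+19',
]
all_sound = all(weighing_is_sound(left, outcome, right)
                for _, left, outcome, right in map(parse_weighing, block_20))
```

For the n = 20 block, `all_sound` is true and `largest_coin(block_20)` is 20. This only checks arithmetic; it does not by itself show that the weighings identify the coins.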
