Two Fake Coins

You are given N coins such that two of them are fake and the other coins are genuine. Real coins weigh the same. Fake coins weigh the same and are lighter than real ones. You need to find both fake coins using a balance scale in the smallest number of weighings.

It is easy to estimate the number of weighings from below using information theory. Given N coins, there are N choose 2 possible answers. Each weighing has three outcomes, so the number of possible answers cannot exceed 3^w, where w is the number of weighings. This gives a trivial information-theoretic bound (ITB) on the number of coins that can be processed in a given number of weighings.

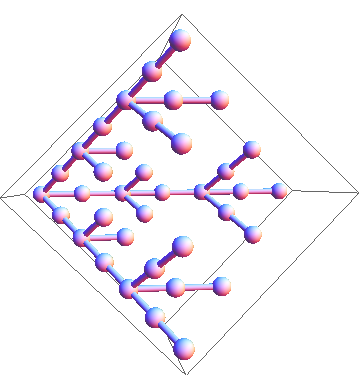
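As a quick illustration of the bound, here is a minimal Python sketch that computes the largest N with N choose 2 not exceeding 3^w. The function name `itb` is my own choice for this example and is not taken from any paper.

```python
from math import comb, isqrt

def itb(w: int) -> int:
    """Largest N such that C(N, 2) <= 3**w (hypothetical helper name)."""
    limit = 3 ** w
    # N(N-1)/2 <= limit is equivalent to (2N-1)^2 <= 8*limit + 1.
    n = (1 + isqrt(8 * limit + 1)) // 2
    # Sanity check of the closed form: n fits, n + 1 does not.
    assert comb(n, 2) <= limit < comb(n + 1, 2)
    return n

for w in range(2, 13):
    print(w, itb(w))
```

For 2 and 3 weighings this gives 4 and 7 coins, and for 5 through 12 weighings it gives 22, 38, 66, 115, 198, 344, 595 and 1031.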

Previous authors used computers to completely analyze small numbers of weighings: from 2 to 5.

My colleagues from Russia, Konstantin Knop and Oleg Polubasov, performed some fantastic programming accompanied by some subtle heuristics and found optimal solutions for up to 10 weighings. For 11 and 12 weighings, the program is not efficient enough to find the optimal solutions: it found some solutions, but they fall short of the bound. Surprisingly, for up to 10 weighings, the optimal solutions match the information-theoretic bound (ITB). The results are in the table below. The first row is the number of weighings w. The second row is the largest number of coins N for which a solution was found. The last row is the ITB explained above.

| w | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|
| N | 22 | 38 | 66 | 115 | 198 | 344 | 585 | 1026 |
| ITB | 22 | 38 | 66 | 115 | 198 | 344 | 595 | 1031 |

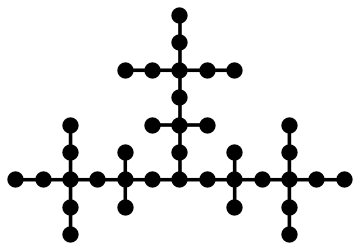

Their paper, "Two counterfeit coins revisited," is available in Russian. Luckily for us, 70 of its 73 pages are pseudo-code of solutions, for which you do not need any Russian. You just need to understand the pseudo-code. Here is the explanation.

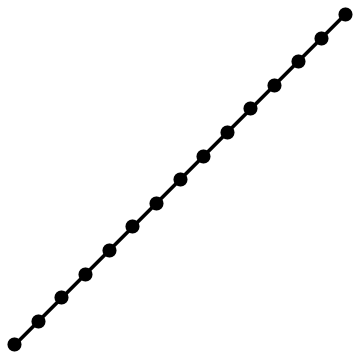

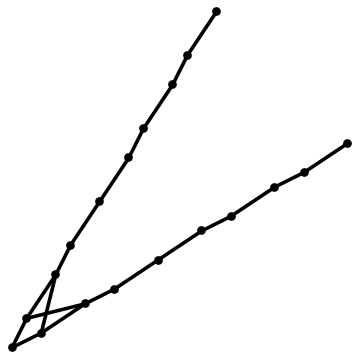

Each line begins with its number. The number is followed by the weighing in the format 1 10 v 4 5, meaning that coins 1 and 10 are weighed against coins 4 and 5. Each weighing is followed by a colon, after which come, in order, the actions for the three possible outcomes of the weighing: equality, the first pan is lighter, and the second pan is lighter. Each action is one of the following:

- => L means go to line L.

- (a, b) means the fake coins are a and b.

- – means this branch is impossible and there is no output.

- => 23. sym indicates the symmetry of the weighing and its result, therefore the resulting go-to line, 23, is omitted as being equivalent to another line.

- – . sym means the output is symmetric to another one.

The line numbers after => in line L are always 3L+1, 3L+2 and 3L+3. The sym symbol implies that line 3L+3 is omitted as a symmetric version of line 3L+2.
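The numbering rule means that the line number alone tells you which outcomes led to that line. Here is a small Python sketch of this arithmetic; the names `children` and `path_to` are mine, chosen only for illustration.

```python
OUTCOMES = ("equal", "first pan lighter", "second pan lighter")

def children(line: int) -> tuple[int, int, int]:
    """Go-to lines for the three outcomes of the weighing in `line`."""
    return (3 * line + 1, 3 * line + 2, 3 * line + 3)

def path_to(line: int) -> list[tuple[int, str]]:
    """Steps (line, outcome) that lead from line 0 to `line`."""
    steps = []
    while line > 0:
        parent = (line - 1) // 3          # inverse of 3L+1, 3L+2, 3L+3
        steps.append((parent, OUTCOMES[(line - 1) % 3]))
        line = parent
    return steps[::-1]

print(children(2))
print(path_to(8))
```

For instance, line 8 is reached from line 0 through line 2, with the first pan being lighter both times.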

For example, here is their solution for 2 weighings and 4 coins in their pseudo-code. An empty line separates different weighings:

0. 1 v 2 : => 1, => 2, => 3. sym

1. 1 v 3 : – , (1, 2), (3, 4).
2. 3 v 4 : – , (1, 3), – . sym

Another solution for 3 weighings and 7 coins:

0. 1 2 v 3 4 : => 1, => 2, => 3. sym

1. 1 v 2 : => 4, => 5, => 6. sym
2. 1 v 2 : (1, 2), => 8, => 9. sym

4. 5 v 6 : (5, 6), (5, 7), – . sym
5. 3 v 4 : – , (1, 3), – . sym
8. 5 v 6 : (1, 7), (1, 5), – . sym
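To convince yourself that the two small solutions above really work, you can run them against every possible pair of fake coins. The following Python sketch does that. It is my own reading of the format, not the authors' program: the names `parse`, `run` and `verify` are hypothetical, and I assume that sym means "reuse the second branch with the coins of the two pans swapped position by position."

```python
import re
from itertools import combinations

# One action: "=> 5" (go to line), "(a, b)" (answer), or a dash (impossible).
# Accepting an ASCII hyphen as well as the en dash is an assumption.
ACTION = re.compile(r"=>\s*(\d+)|\((\d+),\s*(\d+)\)|[–-]")

def parse(text):
    """Parse pseudo-code into {line: (left, right, actions, sym)}."""
    program = {}
    for raw in text.strip().splitlines():
        if not raw.strip():
            continue
        head, tail = raw.split(":", 1)
        number, pans = head.split(".", 1)
        left, right = (tuple(map(int, s.split())) for s in pans.split(" v "))
        actions = []
        for m in ACTION.finditer(tail):
            if m.group(1) is not None:
                actions.append(("goto", int(m.group(1))))
            elif m.group(2) is not None:
                actions.append(("answer", (int(m.group(2)), int(m.group(3)))))
            else:
                actions.append(("impossible", None))
        program[int(number)] = (left, right, actions, tail.rstrip().endswith("sym"))
    return program

def run(program, n, fakes):
    """Follow the program for the given fake pair; return (answer, weighings)."""
    relabel = {c: c for c in range(1, n + 1)}  # program label -> actual coin
    line, used = 0, 0
    while True:
        left, right, actions, sym = program[line]
        l = sum(relabel[c] in fakes for c in left)
        r = sum(relabel[c] in fakes for c in right)
        outcome = 0 if l == r else (1 if l > r else 2)  # more fakes = lighter
        used += 1
        if outcome == 2 and sym:
            # Assumed meaning of sym: mirror the second branch by swapping pans.
            swap = dict(zip(left + right, right + left))
            relabel = {c: relabel[swap.get(c, c)] for c in relabel}
            outcome = 1
        kind, value = actions[outcome]
        if kind == "goto":
            line = value
        elif kind == "answer":
            return tuple(sorted(relabel[c] for c in value)), used
        else:
            raise ValueError(f"impossible branch reached at line {line}")

def verify(program, n):
    """Check every pair of fakes; return the worst-case number of weighings."""
    worst = 0
    for pair in combinations(range(1, n + 1), 2):
        answer, used = run(program, n, set(pair))
        assert answer == pair, (pair, answer)
        worst = max(worst, used)
    return worst

FOUR_COINS = """
0. 1 v 2 : => 1, => 2, => 3. sym
1. 1 v 3 : – , (1, 2), (3, 4).
2. 3 v 4 : – , (1, 3), – . sym
"""

SEVEN_COINS = """
0. 1 2 v 3 4 : => 1, => 2, => 3. sym
1. 1 v 2 : => 4, => 5, => 6. sym
2. 1 v 2 : (1, 2), => 8, => 9. sym
4. 5 v 6 : (5, 6), (5, 7), – . sym
5. 3 v 4 : – , (1, 3), – . sym
8. 5 v 6 : (1, 7), (1, 5), – . sym
"""

print(verify(parse(FOUR_COINS), 4))
print(verify(parse(SEVEN_COINS), 7))
```

Instead of storing the omitted lines, the sketch keeps a relabeling of the coins that is updated each time a sym branch is taken, so nested symmetries compose naturally.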

If you want to see an optimal solution for 10 weighings, look at the paper: the algorithm takes 36 pages.
