Propagation Networks: A Flexible and Expressive Substrate for Computation

My son, Alexey Radul, made his PhD thesis available to the public.

I was amazed at how much he had invested in the thesis. I had assumed that the main goal of a dissertation in computer science is to write groundbreaking code and that the accompanying text is just a formality. However, this is not the case with my son’s thesis. I am not fully qualified to appreciate the “ground-breakingness” of his code, but his thesis text is just wonderful.

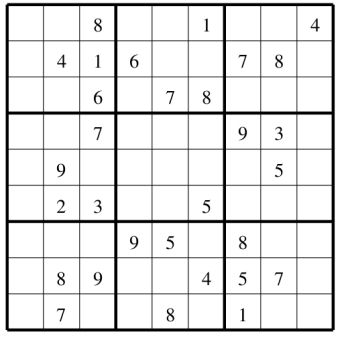

Alexey decided that he wanted to make his thesis accessible to a wide audience. He had to make a lot of choices and decisions while he was designing and coding his prototype, and in his thesis he devoted a lot of effort to explaining this process. He also tried to entertain: I certainly had fun trying to solve an evil sudoku puzzle on page 87, which turned out not to have a unique solution.

In addition to everything else, Alexey is an amazing writer.

I am a proud mother and as such I am biased. So I’ll let his thesis speak for itself. Below is the table of contents accompanied by some quotes:

- Time for a Revolution

“Revolution is at hand. The revolution everyone talks about is, of course, the parallel hardware revolution; less noticed but maybe more important, a paradigm shift is also brewing in the structure of programming languages. Perhaps spurred by changes in hardware architecture, we are reaching away from the old, strictly time-bound expression-evaluation paradigm that has carried us so far, and looking for new means of expressiveness not constrained by over-rigid notions of computational time.”

- Expression Evaluation has been Wonderful

- But we Want More

- We Want More Freedom from Time

- Propagation Promises Liberty

“Fortunately, there is a common theme in all these efforts to escape temporal tyranny. The commonality is to organize computation as a network of interconnected machines of some kind, each of which is free to run when it pleases, propagating information around the network as proves possible. The consequence of this freedom is that the structure of the aggregate does not impose an order of time. Instead the implementation, be it a constraint solver, or a logic programming system, or a functional reactive system, or what have you, is free to attend to each conceptual machine as it pleases, and allow the order of operations to be determined by the needs of the solution of the problem at hand, rather than the structure of the problem’s description.”

- Design Principles

- Propagators are Asynchronous, Autonomous, and Stateless

- We Simulate the Network until Quiescence

- Cells Accumulate Information

- Core Implementation

- Numbers are Easy to Propagate

- Propagation can Go in Any Direction

- We can Propagate Intervals Too

- Generic Operations let us Propagate Anything!

- Dependencies

“Every human harbors mutually inconsistent beliefs: an intelligent person may be committed to the scientific method, and yet have a strong attachment to some superstitious or ritual practices. A person may have a strong belief in the sanctity of all human life, yet also believe that capital punishment is sometimes justified. If we were really logicians this kind of inconsistency would be fatal, because were we to simultaneously believe both propositions P and NOT P then we would have to believe all propositions! Somehow we manage to keep inconsistent beliefs from inhibiting all useful thought. Our personal belief systems appear to be locally consistent, in that there are no contradictions apparent. If we observe inconsistencies we do not crash—we chuckle!”

- Dependencies Track Provenance

- Dependencies Support Alternate Worldviews

- Dependencies Explain Contradictions

- Dependencies Improve Search

- Expressive Power

- Dependency Directed Backtracking Just Works

- Probabilistic Programming Tantalizes

- Constraint Satisfaction Comes Naturally

“This is power. By generalizing propagation to deal with arbitrary partial information structures, we are able to use it with structures that encode the state of the search as well as the usual domain information. We are consequently able to invert the flow of control between search and propagation: instead of the search being on top and calling the propagation when it needs it, the propagation is on top, and bits of search happen as contradictions are discovered. Even better, the structures that track the search are independent modules that just compose with the structures that track the domain information.”

- Logic Programming Remains Mysterious

- Functional Reactive Programming Embeds Nicely

- Rule-based Systems Have a Special Topology

- Type Inference Looks Like Propagation Too

- Towards a Programming Language

- Conditionals Just Work

- There are Many Possible Means of Abstraction

- What Partial Information to Keep about Compound Data?

- Scheduling can be Smarter

- Propagation Needs Better Garbage Collection

- Side Effects Always Cause Trouble

- Input is not Trivial Either

- What do we Need for Self-Reliance?

- Philosophical Insights

“A shift such as from evaluation to propagation is transformative. You have followed me, gentle reader, through 137 pages of discussions, examples, implementations, technicalities, consequences and open problems attendant upon that transformation; sit back now and reflect with me, amid figurative pipe smoke, upon the deepest problems of computation, and the new way they can be seen after one’s eyes have been transformed.”

- On Concurrency

“The ‘concurrency problem’ is a bogeyman of the field of computer science that has reached the point of being used to frighten children. The problem is usually stated equivalently to ‘How do we make computer languages that effectively describe concurrent systems?’, where ‘effectively’ is taken to mean ‘without tripping over our own coattails’. This problem statement contains a hidden assumption. Indeed, the concurrency itself is not difficult in the least—the problem comes from trying to maintain the illusion that the events of a concurrent system occur in a particular chronological order.”

- Time and Space

“Time is nature’s way of keeping everything from happening at once; space is nature’s way of keeping everything from happening in the same place (with apologies to Woody Allen, Albert Einstein, and John Archibald Wheeler, to whom variations of this quote are variously attributed).”

- On Side Effects

- Appendix A: Details

“I’m meticulous. I like to dot every i and cross every t. The main text had glossed over a number of details in the interest of space and clarity, so those dots and cross-bars are collected here.”
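For readers who want a taste of what the chapters above describe, here is a toy propagator network in Python. It is a minimal sketch, not the code from the thesis, and every name in it (`Cell`, `Scheduler`, `propagator`, `product_constraint`, `NOTHING`, `Contradiction`) is hypothetical. It illustrates the design principles in the list: stateless propagators, cells that accumulate information and complain about contradictions, and a scheduler that runs the network until quiescence. It assumes the simplest case, where a cell holds either nothing or one exact number; the thesis generalizes cells to hold partial information such as intervals. The example wires up density = mass / volume as a constraint that works in any direction.

```python
from collections import deque
from fractions import Fraction

NOTHING = None  # hypothetical marker: "this cell knows nothing yet"


class Contradiction(Exception):
    """Raised when a cell is told two incompatible things."""


class Scheduler:
    def __init__(self):
        self.queue = deque()

    def alert(self, propagators):
        # Queue propagators whose inputs may have changed.
        self.queue.extend(propagators)

    def run(self):
        # Simulate the network until quiescence: no pending work is left.
        while self.queue:
            self.queue.popleft()()


class Cell:
    def __init__(self, scheduler, name):
        self.scheduler = scheduler
        self.name = name
        self.content = NOTHING
        self.neighbors = []  # propagators that read this cell

    def add_content(self, value):
        if value is NOTHING:
            return
        if self.content is NOTHING:
            self.content = value
            self.scheduler.alert(self.neighbors)
        elif self.content != value:
            raise Contradiction(f"{self.name}: {self.content} vs {value}")
        # An equal value adds no information, so no neighbor is woken.


def propagator(scheduler, inputs, output, fn):
    """Hypothetical helper: recompute `output` whenever all inputs are known."""
    def run():
        values = [cell.content for cell in inputs]
        if all(v is not NOTHING for v in values):
            output.add_content(fn(*values))

    for cell in inputs:
        cell.neighbors.append(run)
    scheduler.alert([run])


def safe_div(numerator, denominator):
    # Dividing by zero tells us nothing, rather than crashing.
    return NOTHING if denominator == 0 else numerator / denominator


def product_constraint(scheduler, a, b, total):
    """total = a * b, usable in any direction."""
    propagator(scheduler, [a, b], total, lambda x, y: x * y)
    propagator(scheduler, [total, a], b, safe_div)
    propagator(scheduler, [total, b], a, safe_div)


# density = mass / volume, i.e. mass = density * volume
sched = Scheduler()
density = Cell(sched, "density")
volume = Cell(sched, "volume")
mass = Cell(sched, "mass")
product_constraint(sched, density, volume, mass)

mass.add_content(Fraction(10))
volume.add_content(Fraction(4))
sched.run()
print(density.content)  # 5/2
```

Nothing in the network says which value is the "answer": telling it density and volume instead would fill in mass, and telling a cell two different numbers raises `Contradiction`.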

MikeSP:

It’s funny that not too long ago I was saying to myself, “boy – I really wish I could WRITE something to correctly compute the constraint density = mass / volume, given two out of the three values” with a language that supports constraint propagation (LZX from OpenLaszlo). Even then, that framework didn’t support something as basic as this, since you could either bind a value or compute it via constraint (your son has disentangled this in his thesis, I believe). A friend of mine wrote special code on top of the constraint processor to do just this – to your son, it’s native.

Kudos to him!!

(And hope all’s well with you T! ^_^)

MikeSP

17 September 2009, 8:09 am