What is base 3/2? One of the ways to define such a base is to think of it in terms of exploding dots. What the heck are exploding dots? They are explained and popularized by James Tanton in his YouTube videos.

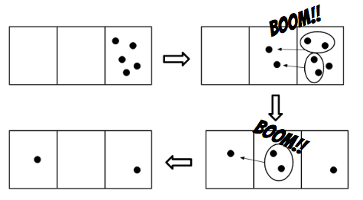

Essentially, “exploding dots” is a machine made of a row of boxes with rules describing how the dots loaded into the machine explode. As an example, let me describe the 1←2 machine, which corresponds to base 2. We load N dots into the rightmost box. Whenever there are 2 dots in one box, they explode into 1 dot in the box to the left.

For example, to write 5 in base 2, we would first load 5 dots in the rightmost box, as in the figure above. Then each group of 2 dots in the rightmost box would explode, and for each group, 1 dot would appear in the box to the left. Finally, the 2 dots in the second box would explode into 1 dot in the next box to the left. By reading the number of dots from left to right, we get 101, which is 5 in base 2.

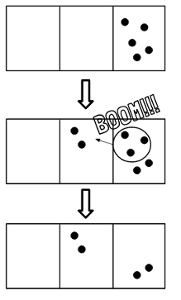

The interesting thing here is that there is no reason this model should be exclusive to integer bases. Suppose our rule is that 3 dots explode into 2 dots in the box to the left. Such a rule is called the 2←3 machine, and it corresponds to base 3/2. To represent 5 in this base, we load 5 dots into the rightmost box and apply the exploding rule, as shown in the second figure above: 3 of the 5 dots explode into 2 dots in the box to the left, and 2 dots stay behind. Using this machine, 5 is represented in base 3/2 as 22.
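Here is a minimal Python sketch of such a machine, in case you want to experiment. The function names `explode` and `value` are my own choices for illustration, and the sketch assumes an a←b machine with a < b and b at most 10, so that each box holds a single digit.

```python
from fractions import Fraction

def explode(n, a, b):
    """Run n dots through an a<-b machine; return the box contents, leftmost first."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        groups, remainder = divmod(n, b)  # each full group of b dots explodes
        digits.append(str(remainder))     # dots that stay in the current box
        n = groups * a                    # a dots per group land one box to the left
    return "".join(reversed(digits))

def value(digits, a, b):
    """Evaluate a digit string in base b/a exactly."""
    base = Fraction(b, a)
    total = Fraction(0)
    for d in digits:
        total = total * base + int(d)
    return total

print(explode(5, 1, 2))   # 101
print(explode(5, 2, 3))   # 22
print(value("22", 2, 3))  # 5
```

The last line is a sanity check: reading 22 in base 3/2 gives 2·(3/2) + 2 = 5.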

The figures were made by my junior PRIMES STEP group, in the 2017-2018 academic year, for our paper, Variants of Base 3 Over 2.

But, in this post, I want to discuss a different paper from the same academic year. With my senior PRIMES STEP group, we wrote a paper On Base 3/2 and its Sequences. A shorter version, which includes a tribute to John Conway, appeared in The Mathematical Intelligencer.

Speaking of John Conway, he liked inventing new sequences, especially ones with unusual behaviors. One of his hobbies was tweaking the Fibonacci rule to create new sequences, which he called Fibs. For example, the sorted Fibs sequence starts the same as the Fibonacci sequence with 0 and 1. To calculate the next term, we add the two previous terms and sort the digits in non-decreasing order. In base 10, this sequence is A069638: 0, 1, 1, 2, 3, 5, 8, 13, 12, 25, 37, 26, …. It is known that this sequence is periodic with a maximum value of 667.
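The base-10 rule is easy to try out. The following is a small sketch in Python; the names `sort_digits` and `sorted_fibs_base10` are made up for this illustration.

```python
def sort_digits(n):
    """Sort the decimal digits of n in non-decreasing order; leading zeros vanish."""
    return int("".join(sorted(str(n))))

def sorted_fibs_base10(count, first=0, second=1):
    """Return the first `count` terms of the base-10 sorted Fibs sequence."""
    terms = [first, second]
    while len(terms) < count:
        terms.append(sort_digits(terms[-2] + terms[-1]))
    return terms[:count]

print(sorted_fibs_base10(12))
# [0, 1, 1, 2, 3, 5, 8, 13, 12, 25, 37, 26]
```

For instance, 8 + 13 = 21 becomes 12 after sorting, and 25 + 37 = 62 becomes 26.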

With my senior PRIMES STEP group, we studied analogs of this sequence in base 3/2. We begin with the sorted Fibs sequence fₙ with the same two initial values that start the Fibonacci sequence: f₀ = 0 and f₁ = 1. To calculate fₙ₊₁, we add fₙ₋₁ and fₙ in base 3/2 and sort the digits in non-decreasing order. It follows that the numbers in the sequence are written as several ones followed by several twos. Unlike in base 10, the sequence is not periodic and grows indefinitely: 0, 1, 1, 2, 2, 12, 12, 112, 112, 1112, 1112, 11112, …. In recognition of the constant growth of this Fibs sequence, we call it the Pinocchio sequence.

Obviously, you can start the sorted Fibs sequence with any two numbers. But we proved an interesting theorem: any sorted Fibs sequence eventually turns into either the tail of the Pinocchio sequence or the 3-cycle 112, 1122, 1122.
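To play with sorted Fibs in base 3/2, here is a rough Python sketch. It is not the code from our paper: the function names are hypothetical, and numbers are kept as digit strings. Addition works column by column like the 2←3 machine: every 3 dots in a box send 2 dots to the box on the left.

```python
def add_base_3_2(x, y):
    """Add two base-3/2 digit strings column by column, right to left."""
    xs = [int(d) for d in reversed(x)]
    ys = [int(d) for d in reversed(y)]
    result = []
    carry = 0
    i = 0
    while i < max(len(xs), len(ys)) or carry:
        s = carry
        if i < len(xs):
            s += xs[i]
        if i < len(ys):
            s += ys[i]
        result.append(s % 3)   # dots left in this box
        carry = 2 * (s // 3)   # every 3 dots send 2 dots to the left
        i += 1
    return "".join(str(d) for d in reversed(result))

def sorted_fibs(first, second, count):
    """First `count` terms of the sorted Fibs sequence with the given start."""
    terms = [first, second]
    while len(terms) < count:
        total = add_base_3_2(terms[-2], terms[-1])
        # Sorting in non-decreasing order moves zeros to the front, where they vanish.
        digits = "".join(sorted(total)).lstrip("0")
        terms.append(digits or "0")
    return terms[:count]

print(sorted_fibs("0", "1", 12))
# ['0', '1', '1', '2', '2', '12', '12', '112', '112', '1112', '1112', '11112']
print(sorted_fibs("112", "1122", 6))
# ['112', '1122', '1122', '112', '1122', '1122']
```

The first call reproduces the start of the Pinocchio sequence, and the second one walks around the 3-cycle twice.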

However, we didn’t stop there. There are two natural ways to sort the digits of a number: in increasing or decreasing order. Naturally, there is another type of sequence worth considering, in which the digits are sorted in non-increasing order. We called such sequences the reverse sorted Fibs.

We defined the reverse sorted Fibs sequence rₙ in base 3/2 as follows. To calculate rₙ₊₁, we add rₙ₋₁ and rₙ in base 3/2 and sort the digits in non-increasing order, ignoring zeros. It follows that after the initial terms, the terms of such a sequence are represented with several twos followed by several ones. We call the reverse sorted Fibs sequence that starts like the Fibonacci sequence, with r₀ = 0 and r₁ = 1, the proper reverse sorted Fibs. Here are several terms of the proper reverse sorted Fibs: 0, 1, 1, 2, 2, 21, 21, 221, 2211, 221, 221, 2211, 221, 221, 2211, …. This sequence becomes cyclic, starting from r₇.

We also found one reverse sorted Fibs sequence that grows indefinitely: 2211, 2211, 22211, 22211, 222211, 222211, and so on. We proved that any reverse sorted Fibs sequence eventually turns into either this sequence or the 3-cycle 221, 221, 2211. The similarity between the sorted Fibs and the reverse sorted Fibs surprised us. Up to the initial terms, they both have exactly one sequence which grows indefinitely and one 3-cycle. To emphasize this similarity, we reversed the word Pinocchio and named this growing reverse sorted Fibs sequence the Oihcconip sequence.
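The reverse sorted Fibs can be explored the same way. Below is a self-contained Python sketch with hypothetical function names. It stores numbers as digit strings, adds them column by column with the rule that 3 dots send 2 dots to the left, drops the zeros, and sorts the remaining digits in non-increasing order.

```python
from itertools import zip_longest

def add_digits(x, y):
    """Add two base-3/2 digit strings; every 3 dots carry 2 dots to the left."""
    out, carry = [], 0
    for a, b in zip_longest(reversed(x), reversed(y), fillvalue="0"):
        s = int(a) + int(b) + carry
        out.append(s % 3)
        carry = 2 * (s // 3)
    while carry:                      # flush whatever carry is left
        out.append(carry % 3)
        carry = 2 * (carry // 3)
    return "".join(map(str, reversed(out)))

def reverse_sorted_fibs(first, second, count):
    """First `count` terms of the reverse sorted Fibs with the given start."""
    terms = [first, second]
    while len(terms) < count:
        total = add_digits(terms[-2], terms[-1])
        # Ignore zeros, then sort the remaining digits in non-increasing order.
        digits = "".join(sorted(total.replace("0", ""), reverse=True))
        terms.append(digits or "0")
    return terms[:count]

print(reverse_sorted_fibs("0", "1", 12))
# ['0', '1', '1', '2', '2', '21', '21', '221', '2211', '221', '221', '2211']
print(reverse_sorted_fibs("2211", "2211", 6))
# ['2211', '2211', '22211', '22211', '222211', '222211']
```

The first call shows the proper reverse sorted Fibs falling into the 3-cycle, and the second one shows the Oihcconip sequence growing.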

Now I need to figure out how to pronounce the name of this new sequence.
